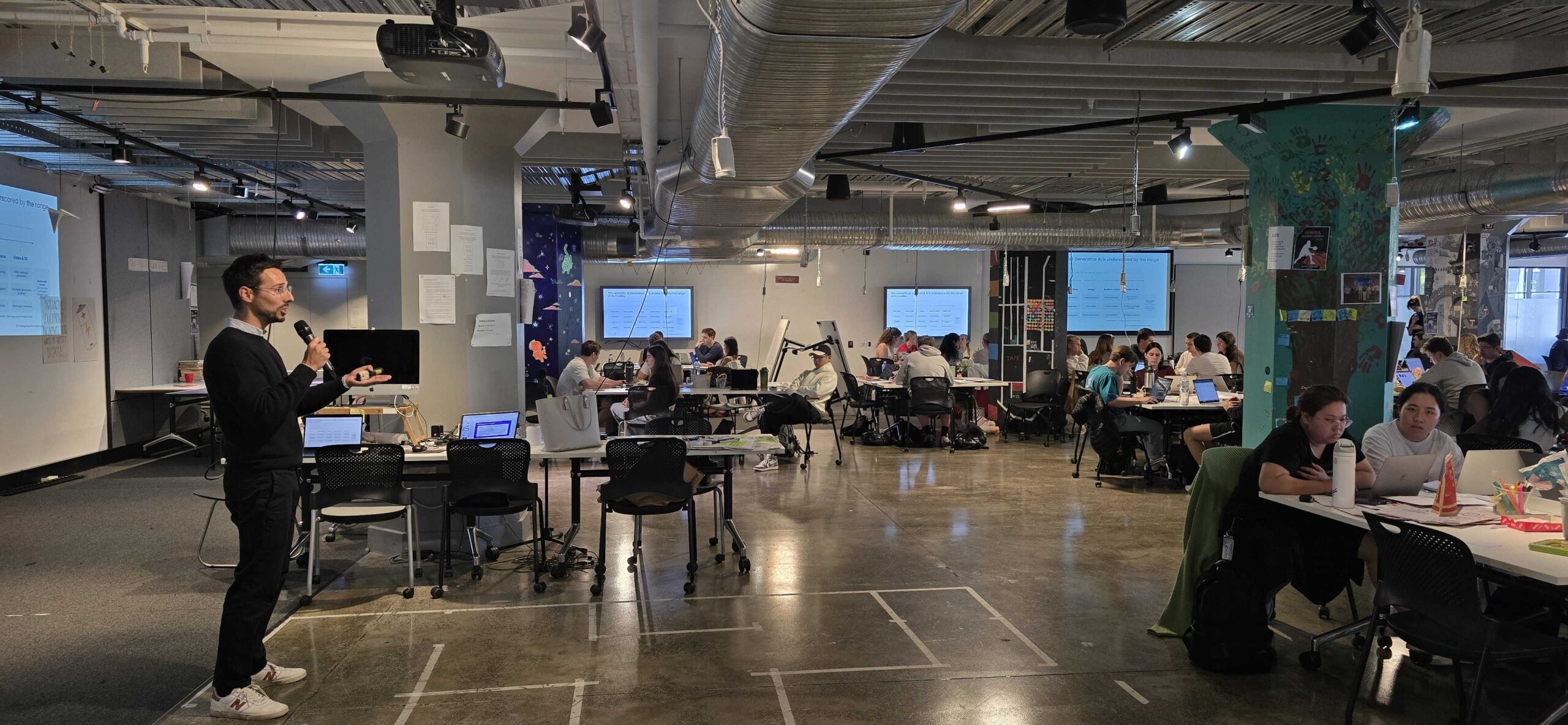

While the concept of Artificial Intelligence (AI) is not new, it is important to recognise that qualities of emotional intelligence such as “empathy” need to be the driving force behind the next generation of AI, as humans will be drawn to human-like interaction. There has always been something “off” about AI that sits on the border of the almost-but-not-quite human. Is it the computerised voice, or the slightly sociopathic mimicking of facial emotions? The burning question is whether “empathy” can be a consideration in AI, and whether machines will actually be able to display and predict emotions.

Advancements in machine learning and artificial intelligence have accelerated sharply in the past 10-15 years. In the past 5 years, progress has been faster still, driven by deep learning and the availability of data. Automated machines are becoming more accurate, more reliable in their predictions and more autonomous, and they are producing more intelligent tools and technologies than ever before. With the increasing developments in computation and processing power, are we at a stage where AI can mimic human behaviour? Can machines now have human-like emotions and empathise?

Empathy is the ability to feel another person’s emotions, to ‘put ourselves in someone else’s shoes’. As consumer research specialists, understanding customers’ desires, motivations and responses places us in a position to understand and answer unmet needs. An intrinsic part of understanding these needs and desires is to engage empathetically with our customers, intuitively connecting with their stories and experiences to really understand what motivates them to do what they do and feel how they feel.

Can mathematical tools define emotional responses?

Emotions differ considerably from one person to another. Can AI find patterns in these emotions, tease them apart and rebuild them into operational parts that form one universal model? Can we imitate a smile, or imitate anger? Before you consider the mathematical algorithm, there are two examples I’d like to share.

First, during a consulting project, I was asked to take an online EQ test (think about that: an online evaluation of emotions, without empathy or face-to-face interaction). I was presented with a series of questions, static images and faces, and for each stimulus the idea was to say whether the individual depicted was ‘anxious’, ‘sad’, ‘elated’ and so on. In the evaluation, I was surprised to see abstract images in bold, bright orange and red colours, which I associated with ‘vibrant’ rather than with a more stereotypical response such as ‘anger’. I could have answered ‘anger’, but I’m a creative innovation specialist, so for me bright colours are more readily linked to energy and excitement. Context is everything!

Secondly, while on holiday in France a few years ago, I became separated from my three-year-old daughter in a busy country market. While I frantically looked for her up and down the market, she had been led out of it into a piazza in the opposite direction. During this stressful period, I was unable to communicate in French and the locals were unable to respond in English. After about 10 minutes of speaking broken French and English, a local woman approached me. The expression on my face was fretful and full of despair; seeing this, she firmly indicated that I should go down a path. Shortly afterwards I was reunited with my daughter, who was in the safe hands of a policeman and was crying. The moment she held my hand she stopped crying, and I started crying. This is a complex emotional situation, so what algorithm would you use to model my emotional response?

If a computer emulates crying, is this an emotion?

I think it’s an interesting point: as machines become more efficient, more accurate and smarter at identifying patterns and making predictions, do we define this process as a function of human learning or of artificial learning? Where is the intersection between the two? We can also extend this question to other human pursuits, for example, the robots that would be painters. We’ve heard of AI-influenced art, artwork created by an algorithm that has been developed from thousands of images. If a computer creates art, is it Art?

I was reading through an interview with Danielle Krettek, the Founder and Principal of Google’s Empathy Lab, where she leads a team attempting to train empathy into Google’s algorithms. To quote her:

“I think that when it comes to the magic and mystery of emotion, I think you can look at the idiosyncrasies of the dance of emotion in a person and think that there’s no pattern in that. But in truth, we all do have our patterns, like literally there are emotional rhythms and emotional tendencies. So, I think if we allow machines to observe us long enough, they’ll probably be able to mimic us very convincingly. But my personal opinion is that the real emotional connection, that real empathic connection, and the idea of being self-aware, I think is a uniquely human thing.”

Which leads me to my last question for your consideration. What algorithm can truly capture our complex human consciousness and imitate the warmth of a human heart, touch and speech? Do you think we could ever reach a point at which machines truly experience real empathy, or will we have to make do with a high-level imitation?